When we switched to Mongo Atlas Cloud some time ago, we did so because of various issues we were facing at the time. We expected the change not only to resolve our performance and stability problems, but also to bring new features. The first big feature, available only to cloud clients, that we decided to implement was Mongo Atlas Search. Why? Because if you’ve ever worked with engines like Elastic for full-text features and advanced indexes, then you know it’s really challenging…

How does Elastic work?

At the beginning, you have data – for example, blog articles in an existing database, such as MySQL. To be able to run efficient full-text searches on them, you need to synchronize them to an Elastic database, which, of course, must first be installed and operated somewhere. Maybe you know this, maybe you don’t, but Elastic requires a lot of RAM. Once you’ve finally dealt with the nightmare of synchronization (creating an article, publishing an article, changing an article, deleting an article…), you basically have duplicate data – in the original database and also in the Elastic database.

But this is far from the end. You still need to extend the API to connect to the Elastic server, completely change your queries and pull primary data from Elastic when searching. If you want to save space and synchronize only the indexed fields to Elastic rather than the complete data, then you must combine the search results with the parent database and fetch the data that isn’t in Elastic by ID.

These are all more or less one-time matters that can be managed. But then comes the operation – backup, updates and monitoring. You’ve burdened the (until now) clean code with extra libraries and extra business logic, and a new version of “something” in this stack can easily put more wrinkles on your forehead and bring additional development costs.

There’s no doubt that Elastic and similar engines ultimately bring a high level of comfort and pleasure to our clients’ customers. However, the same result can be achieved more efficiently. And what comes next is…

Mongo Atlas Search

I can vividly imagine what it looked like in the MongoDB development team when they invented Atlas Search. No syncs, no dramatic code changes, no extra running costs! A revolution!

Atlas Search is a search engine that’s part of the Mongo Atlas Cloud. It’s there without you, as a software developer, lifting a finger. It saves you all the woes, is functional and ready to use with a simple extension in aggregations. If you’re thinking, “Elastic is great because it’s built on Apache Lucene!”, you probably don’t see a reason to change. However, you may be convinced by the fact that Mongo Atlas Search uses exactly the same indexing system – Apache Lucene.

How to set up Atlas Search and make it work?

First, it’s necessary to understand how Mongo Atlas Search works. Indexes are the foundation. You can set them up via the cloud web interface: you choose the cluster, database and collection you want to index, and then either click through the visual editor or write a definition in JSON. I prefer JSON, as it gives me more options and more control over what gets indexed and how.

Let’s look at the basic settings using the example we implemented for MediaManager search (MediaManager is an application for managing media, such as photos and documents). Each photo has a title and a description, and these are the attributes that a customer wants to search in.

For each index, Mongo records its name, indexing status and the attributes of the data that are part of the index. The definition of our index is as follows:

    {
      "mappings": {
        "dynamic": false,
        "fields": {
          "_description": [
            {
              "type": "string"
            }
          ],
          "_name": {
            "analyzer": "lucene.standard",
            "multi": {
              "filenameAnalyzer": {
                "analyzer": "filenameAnalyzer",
                "type": "string"
              }
            },
            "type": "string"
          },
          "app_id": {
            "analyzer": "lucene.keyword",
            "searchAnalyzer": "lucene.keyword",
            "type": "string"
          },
          "created_date": {
            "type": "date"
          }
        }
      },
      "analyzers": [
        {
          "charFilters": [],
          "name": "filenameAnalyzer",
          "tokenFilters": [
            {
              "type": "lowercase"
            }
          ],
          "tokenizer": {
            "pattern": "[ _\\-\\.]+",
            "type": "regexSplit"
          }
        }
      ]
    }

In the mappings object, you define the attributes of the document that you want to index using Atlas Search. At the same time, you also tell it how to index them. The basic types are string, number, boolean and date, plus special ones such as autocomplete. The complete list can be found in the official documentation.

For each attribute, you specify its type and can define a so-called analyzer. The analyzer is the way the search engine breaks the data (a string) into so-called tokens. We use lucene.standard, which is the default, or lucene.keyword if the value is a keyword that must match exactly in the search.

Try your own analyzer!

The standard analyzer breaks text into words based on word boundaries, which works well for ordinary prose but not necessarily for file names. So what do you do if, like us, you want to search in file names, which can also look like this: Petra-Vlhova-3.jpg? After all, you need to find this image with the expression petra as well as vlhova. Therefore, you define your own analyzer that splits the string according to the regular expression [ _\-\.]+, i.e. on spaces, underscores, dashes and full stops. As a bonus, you set up the analyzer to convert the entire name to lowercase thanks to the lowercase filter.

This way, you can add multiple analyzers to one attribute and then choose which one to use at query time.
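To get a feel for what the custom analyzer does to a file name, here is a minimal Python sketch that imitates it outside of Atlas. It is only an approximation: the function names are hypothetical, and dropping empty tokens is an assumption made for the illustration rather than documented Atlas behaviour. Only the regular expression and the lowercase step are taken from the index definition.

```python
import re

# Same pattern as the "regexSplit" tokenizer in the index definition.
FILENAME_SPLIT = re.compile(r"[ _\-\.]+")


def filename_tokens(name: str) -> list[str]:
    """Approximate the custom analyzer: split on separators, then lowercase."""
    parts = FILENAME_SPLIT.split(name)
    # Assumption: empty strings from leading/trailing separators are dropped.
    return [part.lower() for part in parts if part]


def keyword_tokens(value: str) -> list[str]:
    """Approximate lucene.keyword: the whole value stays a single token."""
    return [value]


if __name__ == "__main__":
    print(filename_tokens("Petra-Vlhova-3.jpg"))  # ['petra', 'vlhova', '3', 'jpg']
    print(keyword_tokens("pages"))                # ['pages']
```

With tokens like these, a query for petra or vlhova can match the file name, while a keyword-analyzed field such as app_id only matches on the complete value.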

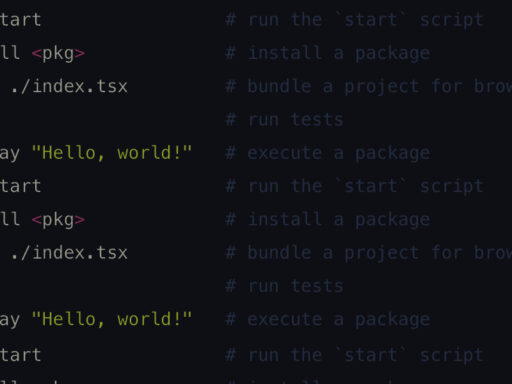

And how do you actually search? Easily!

Simply enrich an existing aggregation pipeline with a $search stage. In it, you define which index you want to use and which operators you want to search with. The choice ranges from full text through autocomplete to geo search or a phrase. In addition, you can of course tune each of these operators – for example, add fuzzy matching (to tolerate errors and typos), or boost the resulting score. All this is precisely described in the MongoDB documentation.

The results are sorted by the relevance score computed by the search engine, taking any boosting into account. What’s new is that you can also use indexed date or number fields to sort the results. This, by the way, is quite big news.
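As an illustration of such a stage, the following Python sketch builds a $search stage with the text operator and, optionally, fuzzy matching and a score boost. The helper name and its parameters are hypothetical; the stage layout (index, text, fuzzy.maxEdits, score.boost.value) follows the Atlas Search syntax as I understand it, so treat it as a sketch to check against the documentation rather than a definitive implementation.

```python
from typing import Any, Optional


def text_search_stage(
    query: str,
    path: str,
    index: str = "default",
    max_edits: Optional[int] = None,
    boost: Optional[float] = None,
) -> dict[str, Any]:
    """Build a $search stage that uses the text operator (hypothetical helper)."""
    text: dict[str, Any] = {"query": query, "path": path}
    if max_edits is not None:
        # Atlas Search accepts 1 or 2 character edits for fuzzy matching.
        if max_edits not in (1, 2):
            raise ValueError("max_edits must be 1 or 2")
        text["fuzzy"] = {"maxEdits": max_edits}
    if boost is not None:
        # Multiply the relevance score of matches on this path.
        text["score"] = {"boost": {"value": boost}}
    return {"$search": {"index": index, "text": text}}


if __name__ == "__main__":
    # A typo in the query ("vlhvoa") can still match thanks to fuzzy matching.
    print(text_search_stage("vlhvoa", "_name", max_edits=1, boost=2.0))
```

The returned dictionary can be placed as the first stage of a pipeline passed to a driver's aggregate call; $search has to come first in the pipeline.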

How do we search in files?

- We use the compound operator, which allows us to combine several rules.

- First, we filter the data by the application that called MediaManager.

- Then we use “should”, i.e. a list of conditions of which a matching document must meet at least one.

- Each of these conditions is itself a “compound” operator, in which “must” requires every individual term of the search string to match.

- The results are sorted by the time the file was added.

The resulting aggregation pipeline then looks like this:

    [
      {
        "$search": {
          "compound": {
            "filter": [
              {
                "text": {
                  "path": "app_id",
                  "query": "pages"
                }
              }
            ],
            "should": [
              {
                "compound": {
                  "must": [
                    {
                      "text": {
                        "path": "_name",
                        "query": "petra"
                      }
                    },
                    {
                      "text": {
                        "path": "_name",
                        "query": "vlhova"
                      }
                    }
                  ]
                }
              },
              {
                "compound": {
                  "must": [
                    {
                      "text": {
                        "path": {
                          "value": "_name",
                          "multi": "filenameAnalyzer"
                        },
                        "query": "petra"
                      }
                    },
                    {
                      "text": {
                        "path": {
                          "value": "_name",
                          "multi": "filenameAnalyzer"
                        },
                        "query": "vlhova"
                      }
                    }
                  ]
                }
              }
            ],
            "minimumShouldMatch": 1
          },
          "sort": {
            "created_date": -1
          }
        }
      },
      {
        "$skip": 0
      },
      {
        "$limit": 10
      },
      {
        "$project": {
          "name": 1,
          "description": 1,
          "scoreDetails": {
            "$meta": "searchScoreDetails"
          }
        }
      }
    ]

And the fact that it’s only an aggregation stage doesn’t prevent you from working with the results at all. Feel free to add $limit, $skip or even $unwind, $group, $project and so on. Mongo Atlas will take care of all the boring work associated with indexing, synchronization and operation of a search engine. And you and your client’s end customers can enjoy the search revolution 🙂
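The pipeline above has the search terms written in by hand. In an application you would typically generate it from whatever the user typed, so here is a minimal Python sketch of how that might look. The function name and its parameters are hypothetical, splitting the query on whitespace is an assumption, and the score-details projection is left out for brevity; the field names and the multi analyzer name come from the index definition earlier in the article.

```python
from typing import Any


def build_media_search_pipeline(
    app_id: str, user_query: str, skip: int = 0, limit: int = 10
) -> list[dict[str, Any]]:
    """Build the MediaManager-style search pipeline (hypothetical helper)."""
    terms = user_query.split()  # assumption: terms are separated by whitespace
    if not terms:
        raise ValueError("user_query must contain at least one term")

    def all_terms(path: Any) -> dict[str, Any]:
        # "must": every term of the query has to match on the given path.
        return {
            "compound": {
                "must": [{"text": {"path": path, "query": term}} for term in terms]
            }
        }

    search = {
        "compound": {
            # Only files that belong to the calling application.
            "filter": [{"text": {"path": "app_id", "query": app_id}}],
            # Match via the standard analyzer OR the custom file name analyzer.
            "should": [
                all_terms("_name"),
                all_terms({"value": "_name", "multi": "filenameAnalyzer"}),
            ],
            "minimumShouldMatch": 1,
        },
        # Newest files first.
        "sort": {"created_date": -1},
    }
    return [
        {"$search": search},
        {"$skip": skip},
        {"$limit": limit},
        {"$project": {"name": 1, "description": 1}},
    ]


if __name__ == "__main__":
    import json

    print(json.dumps(build_media_search_pipeline("pages", "petra vlhova"), indent=2))
```

The resulting list can be handed to a driver's aggregate call on the indexed collection, and paging is then just a matter of changing skip and limit.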