Application testing is still an unfamiliar concept for many developers and companies. When people say testing, they usually mean manually “clicking” through the application before delivery. This procedure fails for a system that is constantly evolving and expanding. New features can break old ones, or they can change the behavior of seemingly unrelated parts of the application. In this way, we get caught in a vicious circle of developing new functionality, maintaining the old and fixing functions that seem to break at random. One way out of this cycle is automated testing, which we at Bart use on the Sportnet platform.

Why actually test?

You’re probably saying you don’t need tests. Welcome to the club, I didn’t need them either – I wasn’t led to test, no one taught me to write tests, nor did they explain why I should test the code. Why write an automated test for something I’m developing right now? After all, I know it works. I’ve finished a feature and I’m sending it to the client for testing. Here and there we need to adjust a small thing, add something somewhere, remove something somewhere, change a calculation, but otherwise we can deploy it to the live version…

After a few hours, the client calls to say that something isn’t working. I’m convinced that everything was fine, but I suppress the huge desire to say one of the sentences ‘it worked for me’, ‘I didn’t change anything’ or ‘it probably went wrong after all the comments were incorporated’. Instead, I fix the bug and click on deploy again. And then again. And again…

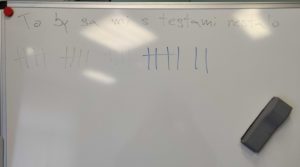

My colleagues and I have experienced a similar scenario so many times that we set up a whiteboard in the office and added a tally mark to it every time something similar happened. We identified missing tests as the source of the problem.

After a few dozen marks, we ran out of space on the board. So we stopped writing them down and started to really deal with testing.

Testing methods

There are plenty of types and methods of testing. In the context of testing web applications, we focused on four of them, namely:

- manual testing,

- E2E, a.k.a. ‘end-to-end’ testing,

- integration testing,

- unit testing.

Manual testing takes place “by hand”: a human tester must run through the test scenarios every time. It gives the developer the slowest and least precise feedback, but at the same time it’s the only real software testing, because automated tests are only tools that verify whether the system works as specified.

The problem of automating manual testing is solved by E2E tests, where the tester writes down a scenario that is then executed automatically by software. The scenario might look, for example, like this:

- go to the address http://www.***.sk/login

- click in the text box for the login name

- enter login name

- click in the text box for the login password

- enter password

- press the ‘login’ button

- expect ‘you are logged in’ to appear on the screen.

If such a test passes, our functionality probably works. However, it does not cover the various special cases, such as an account that has not been activated. We would have to write a separate test for that.

An automated tester also won’t “notice” that something may be wrong with the page even though the test passes. For example, the login form may not be visible at all because CSS was used incorrectly.

So if we write an E2E test, we usually cover the so-called ‘happy path’, that is, the most likely scenario.
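The login scenario above, together with a separate test for a non-activated account, could be sketched roughly as follows. A real E2E test would drive an actual browser with a dedicated tool. To keep this sketch self-contained and runnable, a hypothetical in-memory `FakeLoginPage` stands in for both the browser and the application. All names, credentials and the ‘account is not activated’ message are assumptions made for illustration.

```python
class FakeLoginPage:
    """Hypothetical stand-in for a browser session on the login page."""

    def __init__(self, accounts):
        # accounts maps login name -> (password, is_activated)
        self.accounts = accounts
        self.fields = {"login": "", "password": ""}
        self.focused = None
        self.screen_text = ""

    def click(self, field):
        # Clicking a text box gives it focus.
        self.focused = field

    def type(self, text):
        # Typed text goes into the focused text box.
        self.fields[self.focused] = text

    def press_login(self):
        password, activated = self.accounts.get(
            self.fields["login"], (None, False)
        )
        if password is None or password != self.fields["password"]:
            self.screen_text = "wrong login or password"
        elif not activated:
            self.screen_text = "account is not activated"
        else:
            self.screen_text = "you are logged in"


def run_login_scenario(page, login, password):
    """Replay the steps of the scenario and return what the screen shows."""
    page.click("login")
    page.type(login)
    page.click("password")
    page.type(password)
    page.press_login()
    return page.screen_text


def test_happy_path():
    page = FakeLoginPage({"anna": ("secret", True)})
    assert run_login_scenario(page, "anna", "secret") == "you are logged in"


def test_not_activated_account():
    # The special case needs its own scenario and its own expectation.
    page = FakeLoginPage({"boris": ("secret", False)})
    assert (
        run_login_scenario(page, "boris", "secret")
        == "account is not activated"
    )
```

The two test functions differ only in the prepared account and the expected text, which shows why every special case adds another scenario to write and maintain.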

Integration testing is usually no longer performed by testers, but by the developers themselves. This is testing to check whether larger pieces of software work together. An example of this can be testing whether data is written in the database after registration and whether we can subsequently read it from there without any problems.
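As a minimal sketch of such an integration test, the example below registers a user and reads the record back. It assumes an in-memory SQLite database and a hypothetical `users` table; `register_user` and `find_user_by_email` are illustrative names, not part of any real project.

```python
import sqlite3


def create_schema(conn):
    # Hypothetical schema used only for this example.
    conn.execute(
        "CREATE TABLE users ("
        "id INTEGER PRIMARY KEY, "
        "email TEXT UNIQUE NOT NULL, "
        "name TEXT NOT NULL)"
    )


def register_user(conn, email, name):
    """Store a new user and return the generated id."""
    cur = conn.execute(
        "INSERT INTO users (email, name) VALUES (?, ?)", (email, name)
    )
    conn.commit()
    return cur.lastrowid


def find_user_by_email(conn, email):
    """Return the user as a dict, or None if there is no such user."""
    row = conn.execute(
        "SELECT id, email, name FROM users WHERE email = ?", (email,)
    ).fetchone()
    if row is None:
        return None
    return {"id": row[0], "email": row[1], "name": row[2]}


def test_registered_user_can_be_read_back():
    # A fresh in-memory database keeps the test independent of other runs.
    conn = sqlite3.connect(":memory:")
    try:
        create_schema(conn)
        user_id = register_user(conn, "anna@example.com", "Anna")
        assert find_user_by_email(conn, "anna@example.com") == {
            "id": user_id,
            "email": "anna@example.com",
            "name": "Anna",
        }
        assert find_user_by_email(conn, "nobody@example.com") is None
    finally:
        conn.close()
```

The test exercises the registration code and the database together, so it would also catch a mismatch between the two, such as a wrong column name, that a test of either part alone might miss.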

Unit tests test the program flow in individual functions at the lowest possible level and check all possible scenarios that may occur during the execution of functions. The ideal unit test is independent from other tests and the rest of the tested program. In order to achieve independence, auxiliary objects are created that simulate the assumed context in which the function will be run. We call this procedure mocking.
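A small sketch of a unit test with mocking, using Python’s standard `unittest.mock` module. The function `send_welcome_email` and the `mailer` object with its `send` method are hypothetical and exist only for this illustration.

```python
from unittest.mock import Mock


def send_welcome_email(user, mailer):
    """Send a welcome email to an activated user; return True if sent."""
    if not user.get("activated"):
        return False
    mailer.send(
        to=user["email"],
        subject="Welcome",
        body=f"Hello {user['name']}!",
    )
    return True


def test_activated_user_gets_email():
    mailer = Mock()  # simulates the mail service, nothing is really sent
    user = {"email": "anna@example.com", "name": "Anna", "activated": True}

    assert send_welcome_email(user, mailer) is True
    mailer.send.assert_called_once_with(
        to="anna@example.com", subject="Welcome", body="Hello Anna!"
    )


def test_inactive_user_gets_no_email():
    mailer = Mock()
    user = {"email": "boris@example.com", "name": "Boris", "activated": False}

    assert send_welcome_email(user, mailer) is False
    mailer.send.assert_not_called()
```

Because the mail service is replaced by a mock, both branches of the function can be checked without a network connection or a real mail server.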

I admit that in some cases I don’t see the difference between integration and unit testing and I am not the only one. But it doesn’t matter what the tests are called, what they do is what matters.

It’s not important what you call it, but what it does – Gojko Adzic

Pyramid or ice cream?

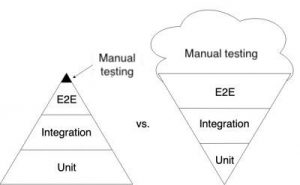

The figure above shows two different approaches to the ratio of different types of tests within one project.

With the recommended ‘pyramid’ approach, most of the testing is done with unit tests, which form the basis of all testing, and only the minimum is tested manually.

The second approach, the ‘ice cream cone’, focuses mainly on manual testing and E2E testing. The main idea of this approach is that if we cover a part of the software manually or with an automated E2E test, all of its sub-parts must work as well.

The ice cream cone procedure is especially liked by:

- developers, as most of the work is shifted to testers,

- managers, because these tests simulate real behavior and therefore they know how it will affect the end user,

- testers, because they don’t have to worry about whether they will test all the options, but they only test the minimum.

Source: https://testing.googleblog.com/2015/04/just-say-no-to-more-end-to-end-tests.html

For both approaches, the ice cream cone and the pyramid, it holds that the higher we move up through them:

- the longer the tests take to give feedback,

- the vaguer the tests are,

- it’s increasingly complicated to set up the environment in which they run,

- it’s more difficult to find out from a specific failing test exactly where the error occurred,

- they cover larger parts of the software,

- they fail more often, even if nothing changes for the user.

At the same time, for convenient development, developers demand the exact opposite, namely:

- Fast feedback – the ability to run tests even after each code change locally on their computer with results available in a few milliseconds to seconds.

- The specific cause of failure – if a test fails, it isn’t necessary to debug at length, on the contrary, it’s clear in which specific place the test failed and why (expected vs. real value).

- Test isolation – tests aren’t affected by external influences and their output doesn’t change in time, so their result should be the same when run in any order, at any time and on any machine.
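The isolation requirement can be illustrated with code that depends on the current date. In the sketch below, the hypothetical function `days_until_expiry` accepts the current date as a parameter instead of always reading the system clock, so the test gives the same result on any day and on any machine. The assertion message also shows the expected and the real value.

```python
from datetime import date


def days_until_expiry(valid_until, today=None):
    """Return the number of days left; negative if already expired."""
    # Production code can omit `today`; tests pass a fixed date instead.
    if today is None:
        today = date.today()
    return (valid_until - today).days


def test_days_until_expiry():
    cases = [
        # (valid_until, today, expected)
        (date(2024, 1, 31), date(2024, 1, 1), 30),
        (date(2024, 1, 1), date(2024, 1, 1), 0),
        (date(2024, 1, 1), date(2024, 1, 11), -10),
    ]
    for valid_until, today, expected in cases:
        actual = days_until_expiry(valid_until, today=today)
        assert actual == expected, f"expected {expected}, got {actual}"
```

A test like this runs in a fraction of a second, and if it fails, the message points directly at the case that produced the wrong value.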

These requirements are best covered by unit testing, which is why the ‘pyramid’ approach to automated testing is preferred. It proved to be more effective in our company as well, and after only a few months it saved us dozens of hours of repeated fixing.

More tests, lower costs

You may be concerned that testing will take you a long time, that you won’t be able to meet deadlines because of it, or that you will have difficulty defending the increased costs for the client. In all cases, you are right. BUT remember that the primary goal of (good) developers and development companies is to deliver high quality software that, in the long term, fulfills the task for which it was programmed.

It should not be a matter of money or time. If you need tests to deliver long-term sustainable software, they must be included in the price of development, just like the writing of the code itself.

We could shift our point of view and say that if we don’t write tests, we cause damage to the client, because in the end they will pay more money than they would have to, due to the cyclical fixing of functions.

In conclusion, I would just note that if you think you can deliver quality software without tests, then simply don’t write them. We gave them a chance and it paid off for us (and our client).