In the first part of the E-Shop speed optimization, we introduced tools that help us monitor the status of a website and find speed issues. In this section, we’ll take a look at website optimization on the backend side.

Working with a database

Inefficient database access can slow down, for example, data retrieval through the API and server responses in general. Let’s look at a few techniques for dealing with such slowdowns. The examples cover MySQL, but the same principles apply to other databases as well.

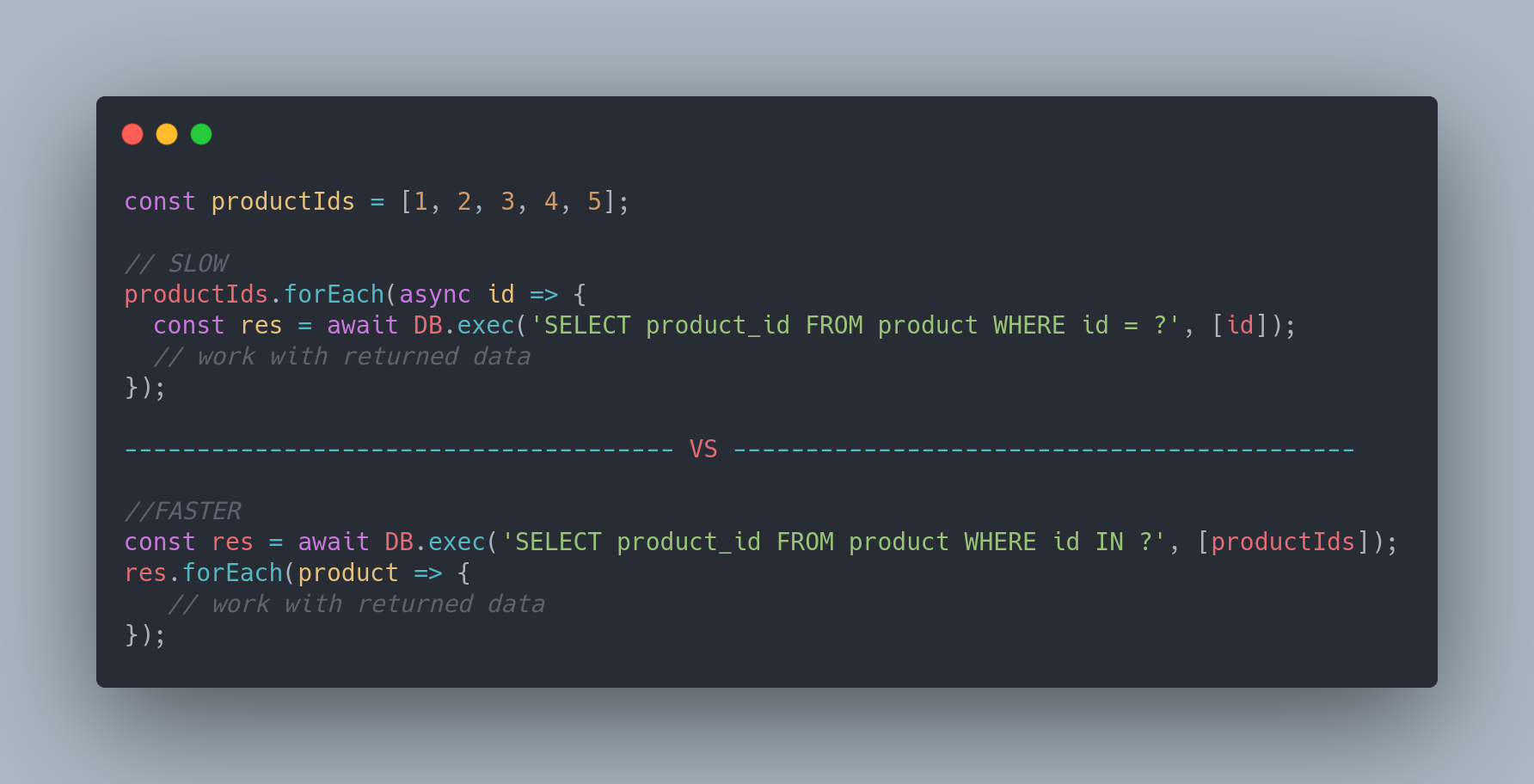

Reduction of the number of queries

The fewer queries we send to the database, the better. The first example concerns fetching data for multiple products. Instead of going through each ID and requesting its data separately, we can retrieve all the data in a single query and then iterate over the result.

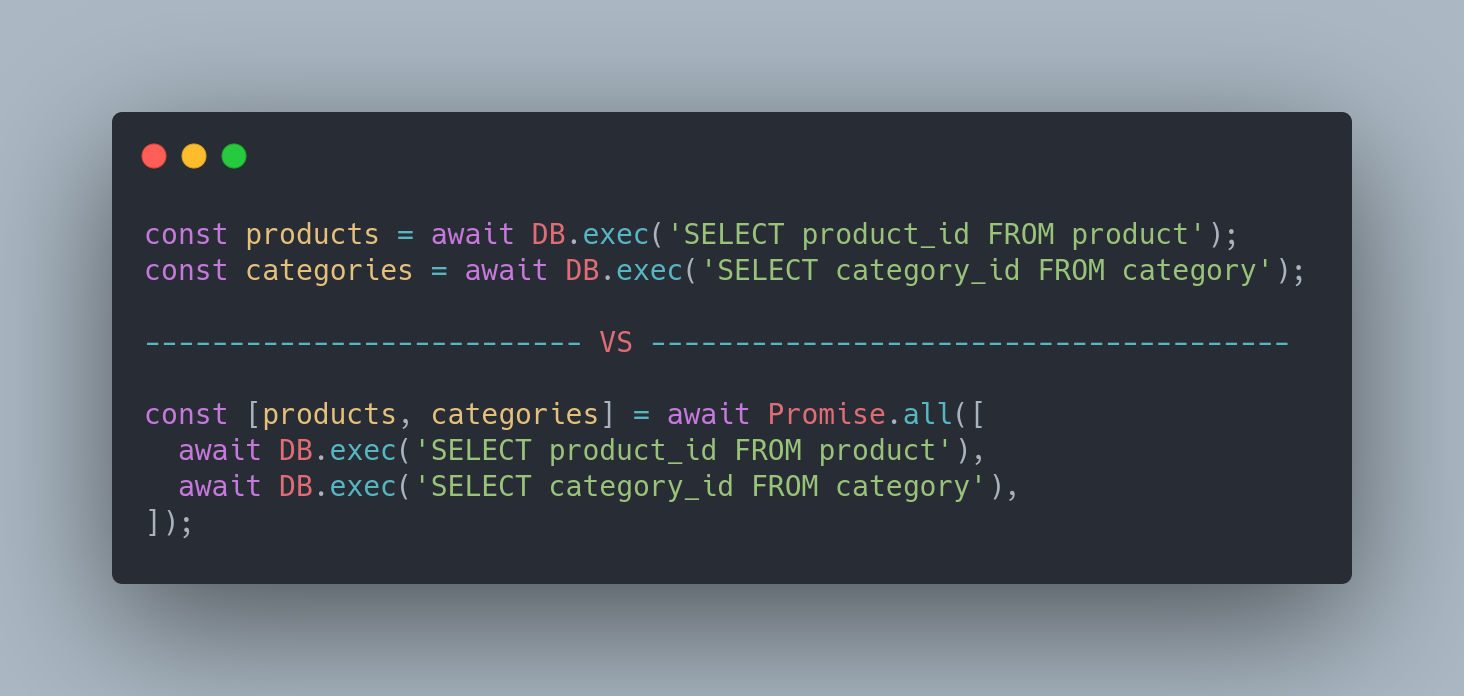

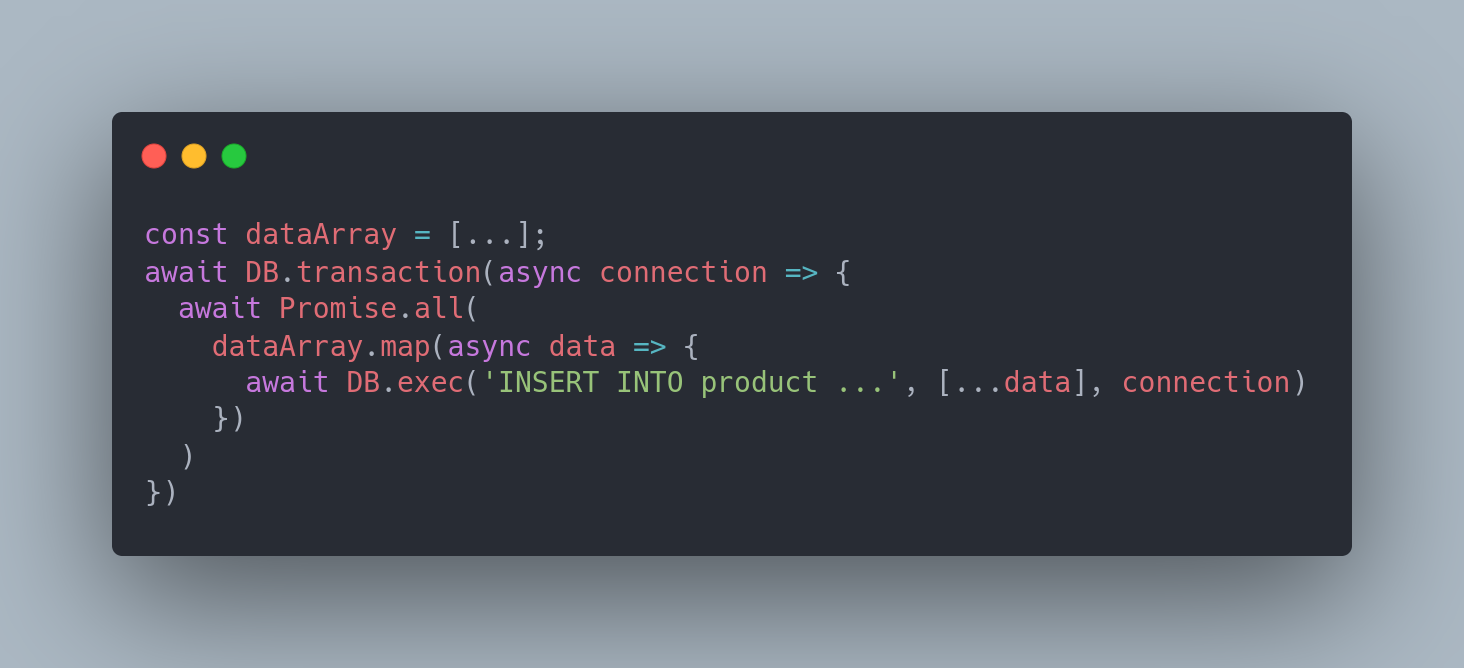
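As a rough sketch of the batched approach, the following builds a single parameterized query for all IDs. The products table, its columns, and the db client (any object whose query(sql, params) method resolves to an array of rows) are assumptions made for illustration.

```javascript
// Hypothetical table and column names; `db` is any client exposing
// query(sql, params) that resolves to an array of rows.

// Build one parameterized query for all IDs instead of one query per ID.
function buildProductsQuery(ids) {
  if (ids.length === 0) {
    throw new Error("ids must not be empty"); // "IN ()" is invalid SQL
  }
  const placeholders = ids.map(() => "?").join(", ");
  return {
    sql: `SELECT id, name, price FROM products WHERE id IN (${placeholders})`,
    params: ids,
  };
}

async function getProductsByIds(db, ids) {
  if (ids.length === 0) return new Map();
  const { sql, params } = buildProductsQuery(ids);
  const rows = await db.query(sql, params);
  // Index the rows by ID so callers can look products up without another query.
  return new Map(rows.map((row) => [row.id, row]));
}
```

However many IDs are passed in, the database receives one query, and the returned Map lets the caller read each product by its ID.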

The second case concerns independent queries, which we can run concurrently with Promise.all instead of awaiting them one after another. Promise.all rejects as soon as any of the queries fails, so if one of them might end in an error, we can use Promise.allSettled instead, which returns the successful results even if another query fails.
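The difference between the two can be sketched as follows. The loadProduct and loadReviews functions are hypothetical stand-ins for independent database or API calls, and the second one always fails so that both behaviours are visible.

```javascript
// Hypothetical loaders standing in for independent database or API calls.
const loadProduct = async () => ({ id: 1, name: "Example product" });
const loadReviews = async () => {
  throw new Error("reviews service unavailable");
};

// Promise.all runs both calls concurrently but rejects as soon as one fails.
async function loadStrict() {
  const [product, reviews] = await Promise.all([loadProduct(), loadReviews()]);
  return { product, reviews };
}

// Promise.allSettled waits for every call and reports each outcome separately.
async function loadTolerant() {
  const [product, reviews] = await Promise.allSettled([loadProduct(), loadReviews()]);
  return {
    product: product.status === "fulfilled" ? product.value : null,
    // Fall back to an empty list when the reviews query fails.
    reviews: reviews.status === "fulfilled" ? reviews.value : [],
  };
}
```

Which variant fits depends on whether the page can still be rendered without the failed part.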

Indexes

Creating indexes that speed up reads is a standard step when optimizing database performance. For more complex queries in MySQL, we can use the EXPLAIN statement, which displays how MySQL plans to process the given query, including which indexes it uses. It’s used by adding EXPLAIN before a SELECT, UPDATE, INSERT, REPLACE, or DELETE statement. If the database slows down, the SHOW PROCESSLIST command shows which operations are currently running, so we can identify and then optimize the slow queries.
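A small helper can make it convenient to inspect a query plan from application code. This is only a sketch: the orders table, its columns, and the index name are hypothetical, and the helper only checks the first keyword of the statement.

```javascript
// Statement types that EXPLAIN accepts, as listed above.
const EXPLAINABLE = ["SELECT", "UPDATE", "INSERT", "REPLACE", "DELETE"];

// Prefix a statement with EXPLAIN so MySQL returns its execution plan.
function toExplain(sql) {
  const trimmed = sql.trim();
  const keyword = trimmed.split(/\s+/)[0].toUpperCase();
  if (!EXPLAINABLE.includes(keyword)) {
    throw new Error(`EXPLAIN is not supported for ${keyword} statements`);
  }
  return `EXPLAIN ${trimmed}`;
}

// Hypothetical query against a hypothetical `orders` table.
const plan = toExplain("SELECT id, total FROM orders WHERE customer_id = 42");
console.log(plan);
// In the result, `type: ALL` with `key: NULL` signals a full table scan,
// which an index on customer_id would typically avoid:
//   CREATE INDEX idx_orders_customer ON orders (customer_id);
```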

Connection to an external system

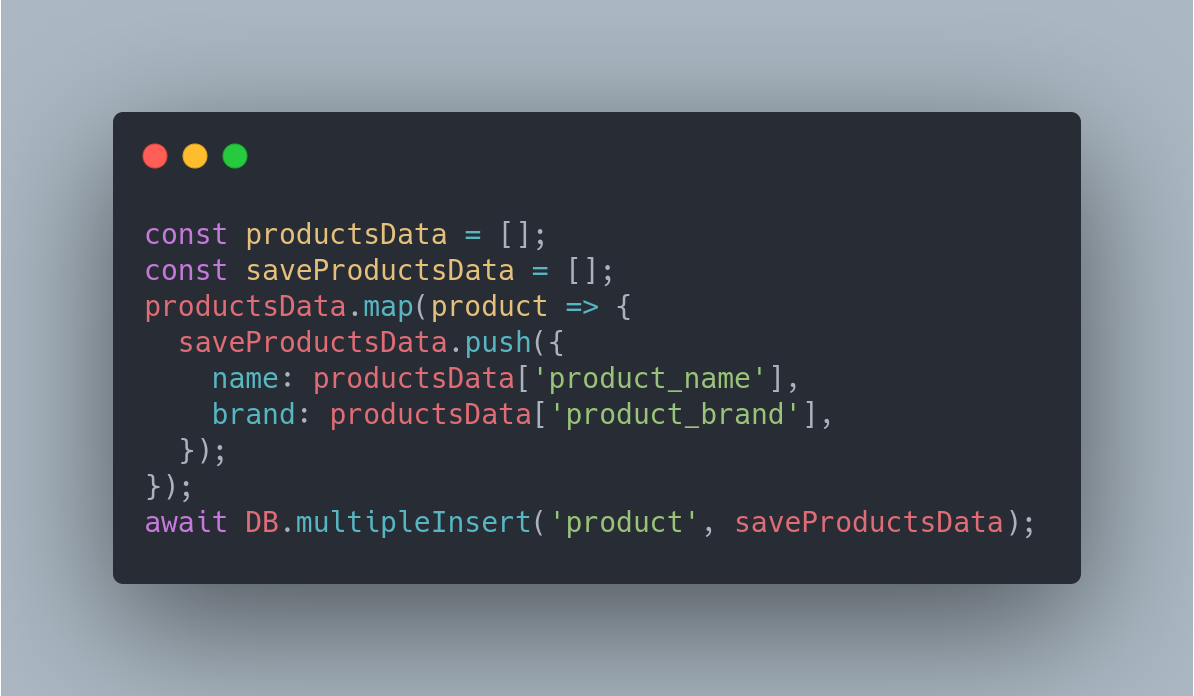

E-shops are very often connected to an external system that holds data about products, prices, or stock. For larger e-shops this can be a relatively large amount of data, and when importing it we can use a single INSERT statement to insert several rows at once, so fewer database queries are required. It’s important to validate the data before we send it to the database, because if one row contains an error, the whole statement fails and the other rows from that INSERT aren’t written either.
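One way to combine validation with a multi-row INSERT is sketched below. The stock table, its columns, and the validation rules are assumptions for illustration; a real import would apply its own rules.

```javascript
// Hypothetical table layout: stock(product_id, quantity).

// Reject malformed rows before they reach the database, because a single
// bad row can cause the whole multi-row INSERT to fail.
function isValidStockRow(row) {
  return Number.isInteger(row.productId) && row.productId > 0 &&
    Number.isInteger(row.quantity) && row.quantity >= 0;
}

// Build one INSERT statement with a "(?, ?)" group per valid row.
function buildStockInsert(rows) {
  const valid = rows.filter(isValidStockRow);
  if (valid.length === 0) return null;
  const groups = valid.map(() => "(?, ?)").join(", ");
  return {
    sql: `INSERT INTO stock (product_id, quantity) VALUES ${groups}`,
    params: valid.flatMap((row) => [row.productId, row.quantity]),
    skipped: rows.length - valid.length,
  };
}
```

For very large imports it’s usually safer to split the rows into chunks, so that a single statement stays below the server’s max_allowed_packet limit.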

Wrapping the inserts and updates in a transaction also speeds them up, because the changes are committed once at the end instead of after every statement, but the gain may only be visible with a larger amount of data.
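A minimal sketch of a batch update inside one transaction follows. It assumes a connection object with promise-returning beginTransaction(), query(), commit(), and rollback() methods, as a mysql2/promise connection provides. The products table and its columns are hypothetical.

```javascript
// `conn` is assumed to expose beginTransaction(), query(), commit() and
// rollback() returning promises, as a mysql2/promise connection does.
async function updatePrices(conn, prices) {
  await conn.beginTransaction();
  try {
    for (const { productId, price } of prices) {
      // Hypothetical table and columns.
      await conn.query("UPDATE products SET price = ? WHERE id = ?", [price, productId]);
    }
    // All updates are committed together instead of once per statement.
    await conn.commit();
  } catch (err) {
    // Undo every update from this batch if any of them fails.
    await conn.rollback();
    throw err;
  }
}
```

Besides the speed-up, the rollback means a failed import doesn’t leave the prices half updated.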

Cache

Caching is an important part of modern websites and is a broad topic in itself. We’ll go over a few tips on how to cache more efficiently.

Cache-Control and ETag

The main caching header that the server should send with its responses is Cache-Control. In this header we can specify the max-age value, given in seconds. Based on max-age, the browser decides whether to request the data from the server again or whether it’s enough to return the locally stored copy. Once the cached copy has expired and the browser has to ask the server again, the ETag header helps. The server sends the ETag in the response headers, and when the browser requests the same file again, it sends the stored value back in the If-None-Match header. The server calculates the current ETag, and if the values match, the content hasn’t changed and the server returns a 304 Not Modified response without a body.
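The server side of this exchange can be sketched in Node.js as follows. Hashing the body with SHA-1 is just one possible way to derive an ETag, and the function names are made up for this example. The comparison is simplified: it ignores weak validators and lists of several ETags in If-None-Match.

```javascript
const crypto = require("crypto");

// Derive an ETag from the response body (one possible scheme).
function computeETag(body) {
  const hash = crypto.createHash("sha1").update(body).digest("hex");
  return `"${hash}"`;
}

// Decide between a full 200 response and an empty 304 Not Modified.
// `requestHeaders` uses lowercase keys, as Node's http module provides them.
function buildResponse(requestHeaders, body, maxAgeSeconds = 3600) {
  const etag = computeETag(body);
  const headers = {
    "Cache-Control": `max-age=${maxAgeSeconds}`,
    ETag: etag,
  };
  if (requestHeaders["if-none-match"] === etag) {
    // The browser's copy is still current, so no body is sent.
    return { status: 304, headers, body: "" };
  }
  return { status: 200, headers, body };
}
```

The first request gets a 200 with the ETag, and a later request that sends the same value in If-None-Match gets a 304 as long as the body is unchanged.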

File names – file versions

We want to cache files for as long as possible. That’s why it’s appropriate to set a long cache lifetime for e.g. the images on the website and, when an image changes, to change its file name or update its version. For example, if the image product-image.jpg changes after it has been cached, we can change the file name to new-product-image.jpg or product-image.jpg?version=111111.
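Renaming files by hand doesn’t scale, so a common variation is to derive the version from the file’s content. The sketch below is one possible scheme rather than the approach used in this article: it inserts a short MD5 hash before the extension, and the function name is hypothetical.

```javascript
const { createHash } = require("crypto");
const path = require("path");

// Insert a short content hash before the extension:
// product-image.jpg -> product-image.<hash>.jpg
function fingerprint(fileName, content) {
  const hash = createHash("md5").update(content).digest("hex").slice(0, 8);
  const { name, ext } = path.parse(fileName);
  return `${name}.${hash}${ext}`;
}
```

The name changes only when the content changes, so unchanged files stay cached and changed files are fetched again automatically.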

Files that won’t change

If we know that the contents of a file will definitely not change, we can add the immutable directive to the Cache-Control header. In browsers that support it, the browser won’t revalidate the file with the server while the cached copy is still fresh, even on a page reload, and returns it from the local cache. We can force the file to be reloaded by renaming it.
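As an illustration, a server could choose the Cache-Control value based on the file name. The naming convention below (a hex segment of at least eight characters before the extension) and the one-year lifetime are assumptions, not requirements.

```javascript
const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60; // 31536000

// Treat names such as "app.3f9a1c2b.js" as fingerprinted (hypothetical
// convention: an 8+ character hex segment before the extension).
const FINGERPRINTED = /\.[0-9a-f]{8,}\.[a-z0-9]+$/i;

function cacheControlFor(fileName) {
  if (FINGERPRINTED.test(fileName)) {
    // Content never changes under this name, so skip revalidation entirely.
    return `public, max-age=${ONE_YEAR_SECONDS}, immutable`;
  }
  // Other files may change in place, so the browser must revalidate them.
  return "no-cache";
}
```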

GZip

Gzip is a data compression format and utility. The server compresses the data before sending it, so the browser receives the same content in a smaller size. Browsers decompress this data automatically, so we can reduce the amount of transferred data relatively easily. In Nginx, compression is turned on with the gzip directive, and once it’s enabled, only HTML responses are compressed by default. It’s possible to enable gzip for other file types by setting gzip_types. In addition, Nginx offers gzip_min_length, which sets the minimum length of a response to compress, and gzip_proxied, which controls compression of responses to requests that arrive through a proxy.
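A configuration fragment using these directives might look like the following. The MIME types and the threshold are illustrative values, not recommendations from this article.

```nginx
# Illustrative values; tune them for your own traffic.
gzip on;                  # compression stays off unless it is enabled
# Additional MIME types to compress; text/html is always included.
gzip_types text/css application/javascript application/json image/svg+xml;
gzip_min_length 1024;     # skip responses shorter than 1024 bytes
gzip_proxied any;         # also compress responses to proxied requests
```

The gzip_min_length threshold keeps Nginx from spending CPU time on very small responses, where compression saves little.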

Conclusion

In this part, we introduced specific backend optimizations, such as database optimization, caching, and compression of transmitted data. In the next part, we’ll focus on frontend optimizations.

Sources and further reading

https://docs.nginx.com/nginx/admin-guide/web-server/compression/

https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Promise/allSettled

https://iamakulov.com/notes/caching/

https://dev.mysql.com/doc/refman/8.0/en/explain.html